Overview

Background

Amyotrophic lateral sclerosis (ALS) is an idiopathic progressive disease that dramatically reduces motor function, with much less impact on sensory functions. About 5,000 new cases of ALS are diagnosed in the U.S. each year, and more than 16,000 individuals are living with the disease. More than 75% of individuals with ALS will ultimately require assistance for communication and control. Research has found that the loss of communication abilities is the main factor for more than 90% of individuals who decide against prolonging their lives on life support. ALS patients in advanced stages are clinically classified into one of two states: 1) Locked-in State (LIS), in which patients maintain residual motor control, and 2) Complete LIS (CLIS), in which motor control is entirely lost.

To compensate for lost motor abilities, ALS patients with mild to significant motor function loss commonly use Augmentative and Alternative Communication (AAC) devices for communication, and occasionally brain-computer interfaces (BCIs) for control. AAC devices require some level of motor function, and are therefore much less beneficial once the patient’s motor abilities decline severely. BCI systems can be either invasive (e.g. electrocorticography, ECoG) or non-invasive (e.g. electroencephalography, EEG). Invasive BCI systems require surgery and are not desirable because of potential risks and complications, such as neurologic and superficial infections, intracranial hemorrhage, elevated intracranial pressure, and movement of electrodes. Also, in advanced stages of ALS, the patient may not be able to provide consent for the surgery, which raises ethical questions. Most non-invasive strategies, however, have substantially lower spatial resolution than invasive methods, require training, and can be cumbersome to set up. Nevertheless, non-invasive EEG-BCIs offer very high temporal resolution, enabling the design of a real-time classification system.

BCIs allow individuals with minimal or no motor function to communicate and control devices by modulating their brain activity, eliminating the need for motor function. Currently, EEG-BCI systems are either stimulus-driven (bottom-up) or self-driven (top-down, i.e. based on voluntarily modifying neural activity). Stimulus-driven EEG-BCIs usually rely on the neural response to external stimuli (e.g. P300 paradigms or Steady State Visually Evoked Potentials), while self-driven EEG-BCIs rely on voluntary modulations of neural activity with minimal to no external stimulation (e.g. Motor Imagery paradigms). Stimulus-driven visual attention paradigms are difficult to use and rely heavily on visual cues and overt visual attention, i.e. looking directly at targets and stimuli. This presents a challenge for advanced ALS patients incapable of any motor function, including eye movements. Stimulus-driven paradigms have been tested successfully with ALS patients, mainly in early stages. Self-driven paradigms require longer training but, if applied properly, are more intuitive to use and do not require any motor movement. An example of a self-driven EEG-BCI is decoding the direction of Covert Visuospatial Attention (CVSA). CVSA paradigms do not require looking directly at a visual target (overt visuospatial attention). Instead, users are instructed to covertly attend to targets, without any eye, head, or neck movement, while keeping their gaze on a fixation point. CVSA paradigms show classification accuracy comparable to that of overt attention BCI paradigms, and they have been shown to generate almost the same pattern of brain activity over the visual cortex as overt attention to visual targets.

Currently, CVSA paradigms rely on visual displays and exogenous or endogenous cuing. With exogenous cuing, while the participant’s gaze is focused on the fixation point, a target appears directly at the location where the participant must covertly attend. With endogenous cuing, the cue appears at the fixation point and prompts the participant to covertly focus on one of the visual targets on the screen. CVSA paradigms have been tested, mainly on healthy participants, with promising results. Both these paradigms rely on external cues presented on a visual display.

To the best of our knowledge, decoding volitional shifts of CVSA in real time from non-invasive EEG signals, without relying on any external stimuli (endogenous or exogenous), has not yet been studied. Virtually nothing is known about how these volitional shifts affect the EEG signal compared to other CVSA paradigms. An fMRI study by Gmeindl et al., which examined the role of volition in CVSA, helps our understanding of this function. While this fMRI paradigm is not feasible for daily use by people with ALS in their living environments, the study identified loci of interest in the brain involved in volitional shifts of CVSA (many likely too small and/or too deep inside the brain to be studied with the low spatial resolution of EEG systems, e.g. medial superior parietal lobule, right middle frontal gyrus, basal ganglia, etc.). These loci are critical to understanding the underlying network of attending volitionally, and they inform our strategy of monitoring the more superficial neural activity of the occipital cortex. From a therapeutic and scientific perspective, learning how volition modulates neural activity over the parieto-occipital regions during CVSA shifts is essential to developing an EEG-BCI system that is sustainable and requires minimal equipment for operation and use by individuals incapable of performing any motor movements. In this project, we aim to develop an Absolutely Volitional Covert Visuospatial Attention (AV-CVSA) paradigm, relying on no visual cues (endogenous or exogenous), as a novel approach to enabling EEG-BCI control.

Objectives

We propose an Absolutely Volitional CVSA (AV-CVSA) paradigm to achieve comparable accuracy in detecting CVSA direction without providing any endogenous or exogenous cues. We choose non-invasive EEG over invasive (but more spatially accurate) methods such as electrocorticography (ECoG) to reduce the risks associated with invasive methods. Despite their low spatial resolution, non-invasive EEG-BCIs offer high temporal resolution, which makes a highly portable real-time system for control and communication feasible. Based on a successful demonstration with early pilot data, our central hypothesis is that, since the visual cortex has a relatively large representation in the parietal and occipital lobes, we can detect CVSA direction with higher accuracy than paradigms such as Motor Imagery. We seek to identify practical data collection and analysis methods that lead to high classification accuracy, and to apply our findings in developing a novel real-time AV-CVSA direction classification system for communication and control.

Objective 1) Develop and optimize an offline system to classify AV-CVSA direction in healthy participants and compare its performance with CVSA direction classification paradigms that use endogenous or exogenous cues. We hypothesize that AV-CVSA will outperform existing CVSA classification paradigms, and that providing CVSA training will result in higher classification performance.

Objective 2) Develop and optimize a real-time classification system to determine the spatial direction of AV-CVSA, for healthy participants and for individuals with ALS who have significant motor impairments, and compare the performance of the real-time classification system with offline classification. We hypothesize that the real-time AV-CVSA paradigm will accurately select dichotomous choices with the same performance as the offline method. We also hypothesize that the real-time AV-CVSA classification system can be optimized for effective use by ALS patients.

Achieving the objectives of this project will uncover previously unknown information about the role of volition in classification of CVSA through surface EEG. Our study will also provide a necessary and uncomplicated means of basic control and communication, in real-time, for patients who may decide to discontinue living on life-support solely due to the lack of communication and control capabilities caused by neurological diseases.

Methods

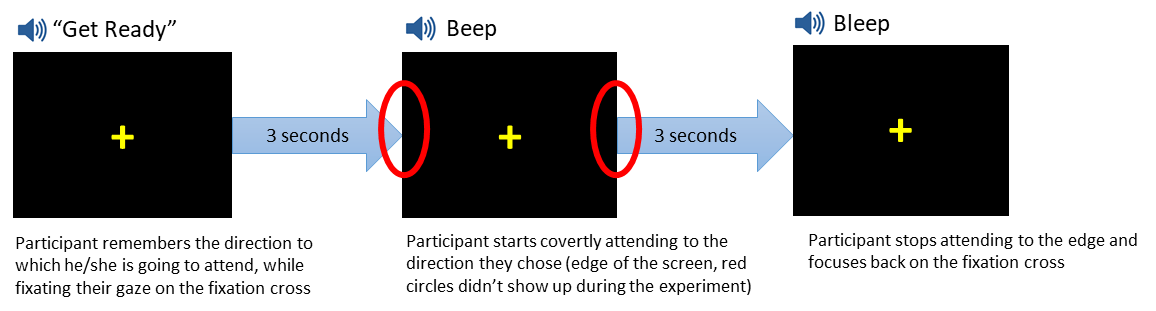

Preliminary studies: We collected EEG data from 4 healthy participants (ages 21-27), using our proposed AV-CVSA paradigm (providing no endogenous or exogenous cues for direction), to establish the feasibility of reliable AV-CVSA classification. We presented a fixation cross to our participants on a display in the center of their visual field. Before each trial, participants told us a sequence of 3 directions to which they were going to attend (e.g. Left-Left-Right: LLR), and we recorded those directions in a chart. Each trial consisted of a sequence of 3 binaural auditory cues, with 3 seconds in between: Get Ready, Beep, and Bleep (Fig. 1). In each trial, this sequence was repeated 3 times. We instructed our participants to recall the direction they had told us they would attend to when they heard the Get Ready cue (e.g. L), then to covertly attend to that direction while keeping their gaze on the fixation cross when they heard the Beep, and to stop attending and focus on the fixation cross when they heard the Bleep.

Participants repeated the same process for the remaining directions (e.g. L and then R). We used neutral binaural auditory cues to avoid making the covert attention involuntary. The intertrial gap was controlled manually to avoid fatigue. To maintain consistency across participants, we instructed them to covertly attend to the edges of the monitor for each direction. Participants sat in a neutral position, 66 inches away from the monitor, creating a 9.0-degree visual angle between the location of covert attention and the fixation cross. Overall, we recorded responses from each participant in 2 sessions: Session 1 (20 trials = 60 Ls & Rs), and Session 2 (40 trials = 120 Ls & Rs). We instructed our participants to avoid overtly looking at the target and to keep their gaze on the fixation cross. We also instructed participants to inform the technician if they overtly looked at the target, blinked, or made any eye movements at all. No such incidents were reported, and during the breaks and after data collection we confirmed protocol adherence with our participants.
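The viewing geometry above can be checked with basic trigonometry. The sketch below is only illustrative: it assumes a flat screen viewed head-on with the fixation cross at its center, and the function names are hypothetical. Under those assumptions, a 9.0-degree angle at 66 inches corresponds to a target roughly 10.5 inches to the side of fixation.

```python
import math


def lateral_offset(distance: float, angle_deg: float) -> float:
    """Sideways distance from fixation (same unit as `distance`)
    that subtends `angle_deg` at the eye, for a flat, head-on screen."""
    return distance * math.tan(math.radians(angle_deg))


def visual_angle_deg(distance: float, offset: float) -> float:
    """Visual angle (degrees) between fixation and a point `offset` away."""
    return math.degrees(math.atan2(offset, distance))


VIEWING_DISTANCE_IN = 66.0  # from the text
TARGET_ANGLE_DEG = 9.0      # from the text

offset_in = lateral_offset(VIEWING_DISTANCE_IN, TARGET_ANGLE_DEG)
print(f"Offset from fixation: {offset_in:.2f} in")
print(f"Round trip: {visual_angle_deg(VIEWING_DISTANCE_IN, offset_in):.1f} deg")
```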

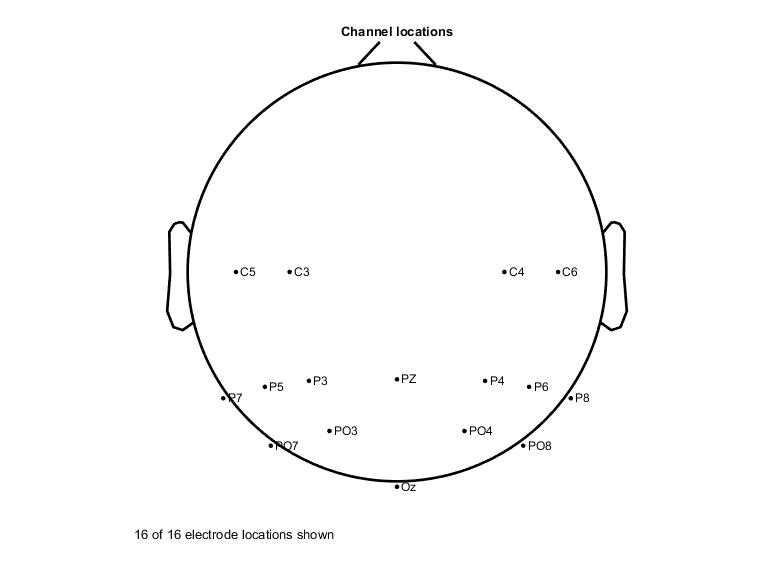

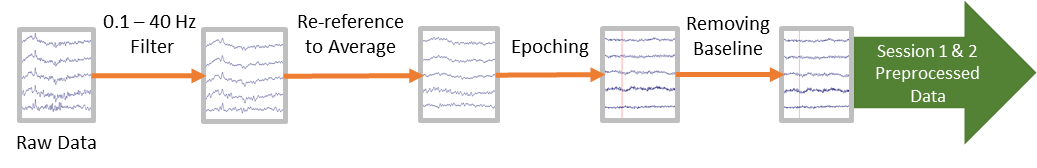

EEG data were collected from 16 channels (g.USBamp; G.TEC Medical Engineering GmbH, Austria) according to the extended 10-20 system, and electrodes were mainly placed on the parietal and parieto-occipital regions (Fig. 2). For data recording and stimulus presentation we used BCI2000 (National Center for Adaptive Neurotechnologies: NCAN, Albany, NY). The data were then pre-processed using EEGLAB (Swartz Center for Computational Neuroscience, La Jolla, CA) by filtering (0.1–40 Hz), re-referencing (to the average of all electrodes), epoching (1 second before to 3 seconds after the beep), removing the baseline (average activity from 1 second before the beep to the onset of the beep), and marking each epoch with the corresponding direction of attention (left or right), based on our chart (Fig. 3). All epochs from Session 1 for all participants were marked. A total of 10 different machine learning (ML) methods were used to process the data: Gradient Boosted Decision Trees (XGBoost), Linear Discriminant Analysis (LDA), Quadratic Discriminant Analysis (QDA), Decision Trees (DT), Naïve Bayes (NB), Deep Neural Networks (DNN), Linear Regression (LR), Random Forests (RF), Support Vector Machines (SVM), and Convolutional Neural Networks (CNN). 80% of the epochs were randomly selected to train the classifiers and the remaining 20% were used for testing, with 10-fold cross-validation.
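The re-referencing, epoching, and baseline-removal steps can be sketched in a few lines. This is a dependency-free illustration, not the EEGLAB pipeline used in the study: the 256 Hz sampling rate is an assumption (the text does not state it), the function names are hypothetical, and the 0.1–40 Hz band-pass filter is omitted.

```python
from statistics import fmean

FS = 256  # Hz; ASSUMED sampling rate, not stated in the text


def average_reference(data):
    """Subtract the across-channel mean from every sample.
    `data` is a list of channels, each a list of samples."""
    ref = [fmean(column) for column in zip(*data)]
    return [[x - r for x, r in zip(channel, ref)] for channel in data]


def extract_epoch(data, onset, fs=FS, pre_s=1.0, post_s=3.0):
    """Cut the window from `pre_s` before to `post_s` after the cue sample."""
    start = onset - round(pre_s * fs)
    stop = onset + round(post_s * fs)
    if start < 0 or stop > len(data[0]):
        raise ValueError("epoch extends beyond the recording")
    return [channel[start:stop] for channel in data]


def remove_baseline(epoch, n_baseline):
    """Subtract each channel's mean over its first `n_baseline` samples."""
    corrected = []
    for channel in epoch:
        base = fmean(channel[:n_baseline])
        corrected.append([x - base for x in channel])
    return corrected


def preprocess_epoch(data, onset, fs=FS):
    """Re-reference, epoch (-1 s to +3 s), and baseline-correct (-1 s to 0 s)."""
    epoch = extract_epoch(average_reference(data), onset, fs)
    return remove_baseline(epoch, n_baseline=round(1.0 * fs))


# Synthetic 3-channel, 6-second recording with a cue at t = 2 s.
n = 6 * FS
recording = [
    [5.0 + (i % 7) for i in range(n)],
    [-2.0 + 0.5 * (i % 5) for i in range(n)],
    [1.0] * n,
]
epoch = preprocess_epoch(recording, onset=2 * FS)
print(len(epoch), "channels x", len(epoch[0]), "samples")
```

Each resulting epoch would then be labeled left or right from the participant's chart before being passed to a classifier.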

We used a moving time-window (MTW) and averaged the electrical activity in each window to represent that portion of the data. The MTW started at the onset of the cue, averaged the activity inside the window, and then moved forward one step at a time until the 3 seconds after the cue were covered. The MTW lengths were 500, 250, 125, and 62.5 ms, with a step size of 31.25 ms. Each window therefore overlapped the previous one by at least 50%, so that activity at the edges of each window was not neglected. We generated accuracy graphs for each window (Fig. 4) to visualize the periods where classification accuracy was consistently above 70% (i.e. the accuracy of training on that particular time-window and testing on the same time-window from the test data).
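The windowing scheme can be illustrated as follows. This is a minimal sketch under an assumed 256 Hz sampling rate (not stated in the text), at which the 31.25 ms step is exactly 8 samples and the window lengths are 128, 64, 32, and 16 samples; the function names are hypothetical.

```python
from statistics import fmean

FS = 256  # Hz; ASSUMED sampling rate, not stated in the text


def window_bounds(n_samples, win_ms, step_ms=31.25, fs=FS):
    """Return (start, stop) sample indices of every full window."""
    win = round(win_ms * fs / 1000)
    step = round(step_ms * fs / 1000)
    return [(s, s + win) for s in range(0, n_samples - win + 1, step)]


def mtw_features(epoch, win_ms, step_ms=31.25, fs=FS):
    """Average each channel inside each window.
    `epoch` holds the post-cue samples as a list of channels;
    the result has one row per window and one value per channel."""
    bounds = window_bounds(len(epoch[0]), win_ms, step_ms, fs)
    return [[fmean(channel[a:b]) for channel in epoch] for a, b in bounds]


post_cue_samples = 3 * FS  # the 3 seconds after the cue
for win_ms in (500, 250, 125, 62.5):
    n_windows = len(window_bounds(post_cue_samples, win_ms))
    overlap = 1 - 31.25 / win_ms
    print(f"{win_ms:>5} ms: {n_windows} windows, {overlap:.1%} overlap")

# Two constant channels: every window mean equals the channel value.
demo_epoch = [[1.0] * post_cue_samples, [2.0] * post_cue_samples]
features = mtw_features(demo_epoch, win_ms=500)
```

With these assumptions the shortest window (62.5 ms) overlaps its predecessor by exactly 50%, and longer windows overlap more, which matches the "at least 50%" figure in the text.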

We hypothesized that these periods can potentially provide better distinction between neural activities when the participant is covertly attending to the left vs. right. We named these regions High Distinction Periods (HDPs) and decided to only use the windows in the HDPs for training and classification to increase classifier performance.
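One way to locate such periods from a per-window accuracy curve is to look for runs of consecutive windows above the 70% threshold. The sketch below is an illustration only: the minimum run length used to stand in for "consistently" is an assumption, the function name is hypothetical, and the accuracy values are made up.

```python
def find_hdps(accuracies, threshold=0.70, min_windows=3):
    """Return (first, last) window indices (inclusive) of every run in which
    accuracy stays above `threshold` for at least `min_windows` windows.
    `min_windows` is an ASSUMED stand-in for "consistently"."""
    hdps = []
    run_start = None
    for i, acc in enumerate(accuracies):
        if acc > threshold:
            if run_start is None:
                run_start = i  # a new candidate run begins
        else:
            if run_start is not None and i - run_start >= min_windows:
                hdps.append((run_start, i - 1))
            run_start = None
    # Close a run that reaches the end of the curve.
    if run_start is not None and len(accuracies) - run_start >= min_windows:
        hdps.append((run_start, len(accuracies) - 1))
    return hdps


# Hypothetical accuracy per window, for illustration only.
accuracy_curve = [0.55, 0.72, 0.74, 0.71, 0.60, 0.73, 0.58, 0.75, 0.76, 0.78]
print(find_hdps(accuracy_curve))  # [(1, 3), (7, 9)]
```

The isolated window at index 5 is above threshold but is not kept, since a single window does not form a sustained period under the assumed minimum run length.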

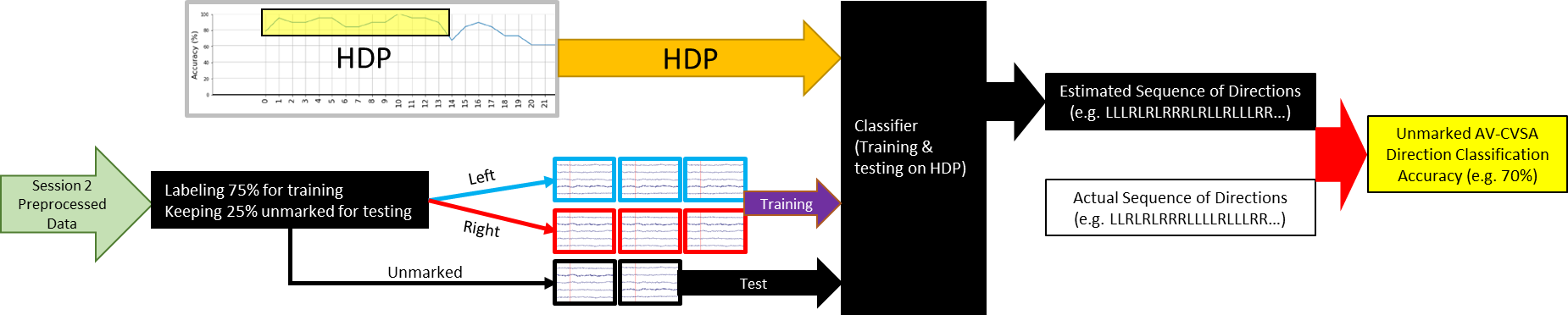

We marked 75% of the Session 2 data and used the HDPs to train our classifiers. The remaining 25% of the data was left unmarked and used as the test set. The classifier then generated a string of lefts and rights (e.g. LLRLRRRLLRLRLRLL…), which was compared to our chart to determine the accuracy rate (Fig. 5). Due to a human error in recording, we had to discard the data from one of the participants. For the remaining 3 participants, we were able to classify the data that was unknown to the program with at least 70% accuracy (70 to 74.07%). This indicates that our aim of classifying AV-CVSA direction with high accuracy across all participants is feasible, but needs further investigation and optimization with a larger sample. In our next steps we aim to increase classification performance by optimizing the classifiers, assess the role of participant training in overall task performance, optimize the data collection and classification pipeline for real-time use, and test our prototype with ALS patients to further optimize the system to address their communication and control needs.
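Scoring the classifier output against the chart amounts to a position-by-position comparison of two label strings. The following sketch uses a hypothetical function name and made-up strings, not study data.

```python
def sequence_accuracy(predicted: str, intended: str) -> float:
    """Fraction of positions where the predicted direction matches the chart."""
    if len(predicted) != len(intended):
        raise ValueError("sequences must have the same length")
    if not intended:
        raise ValueError("sequences must not be empty")
    matches = sum(p == t for p, t in zip(predicted, intended))
    return matches / len(intended)


# Hypothetical strings for illustration only.
intended_chart = "LLRLRRRLLR"
classifier_out = "LLRRRRLLLR"
accuracy = sequence_accuracy(classifier_out, intended_chart)
print(f"{accuracy:.0%}")  # 80%
```

For a two-class task with balanced lefts and rights, chance level is 50%, which is the baseline against which the reported accuracies should be read.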

Team

- University of Wisconsin-Milwaukee
  - Roger O. Smith, PhD, OT, FAOTA, RESNA Fellow
  - Wendy E. Huddleston, PT, PhD
  - Jun Zhang, PhD
  - Sabine Heuer, PhD, CCC-SLP
  - Maysam M. Ardehali, BS, PhD Candidate
  - Qussai M. Obiedat, MS, PhD Candidate

- Marquette University
  - Sheikh Iqbal Ahamed, PhD
  - Olawunmi George, MS

- National Center for Adaptive Neurotechnologies (NCAN)
  - Theresa Vaughan, BA

- Medical College of Wisconsin
  - Edgar A. DeYoe, PhD
  - Adam S. Greenberg, PhD

- Medical College of Wisconsin – ALS Clinic
  - Paul E. Barkhaus, MD, FAAN, FAANEM
  - Serena Thompson, MD, PhD
  - Dominic Fee, MD

Project Bibliography

Birbaumer N, Rana A. (2019). Brain–computer interfaces for communication in paralysis. In: Casting Light on the Dark Side of Brain Imaging. Elsevier, p. 25-9. https://pubmed.ncbi.nlm.nih.gov/27539560/

Rowland LP, Shneider NA. (2001). Amyotrophic lateral sclerosis. N Engl J Med, 344(22):1688-700. https://pubmed.ncbi.nlm.nih.gov/11386269/

Oskarsson B, Gendron TF, Staff NP. (2018). Amyotrophic lateral sclerosis: an update for 2018. In: Mayo Clinic Proceedings; Elsevier, p. 1617-28. https://pubmed.ncbi.nlm.nih.gov/30401437/

The ALS Association. Who Gets ALS? [Internet]. [cited 8/15/2019]. Available from: http://www.alsa.org/about-als/factsyou-should-know.html

Körner S, Siniawski M, Kollewe K, Rath KJ, Krampfl K, Zapf A, Dengler R, Petri S. (2013). Speech therapy and communication device: Impact on quality of life and mood in patients with amyotrophic lateral sclerosis. Amyotrophic Lateral Sclerosis and Frontotemporal Degeneration, 14(1):20-5. https://pubmed.ncbi.nlm.nih.gov/22871079/

The ALS Association. Communication Guide. [Internet]. [cited 8/15/2019]. Available from: http://www.alsa.org/alscare/augmentative-communication/communication-guide.html

Beukelman D, Fager S, Nordness A. (2011). Communication support for people with ALS. Neurol Res Int, 714693. https://www.hindawi.com/journals/nri/2011/714693/

Brownlee A, Palovcak M. (2007). The role of augmentative communication devices in the medical management of ALS. NeuroRehabilitation, 22(6):445-50. https://pubmed.ncbi.nlm.nih.gov/18198430/

Albert SM, Rabkin JG, Del Bene ML, Tider T, O’Sullivan I, Rowland LP, Mitsumoto H. (2006). Wish to die in end-stage ALS. Neurology, 65(1):68-74. https://pubmed.ncbi.nlm.nih.gov/16009887/

Moss AH, Casey P, Stocking CB, Roos RP, Brooks BR, Siegler M. Home ventilation for amyotrophic lateral sclerosis patients: Outcomes, costs, and patient, family, and physician attitudes. Neurology, 43(2):438-43. https://pubmed.ncbi.nlm.nih.gov/8437718/

Moss AH, Oppenheimer EA, Casey P, Cazzolli PA, Roos RP, Stocking CB, Siegler M. Patients with amyotrophic lateral sclerosis receiving long-term mechanical ventilation: Advance care planning and outcomes. Chest. 1996;110(1):249-55. https://pubmed.ncbi.nlm.nih.gov/8681635/

Maessen M, Veldink JH, van den Berg LH, Schouten HJ, van der Wal G, Onwuteaka-Philipsen BD. Requests for euthanasia: Origin of suffering in ALS, heart failure, and cancer patients. J Neurol. 2010;257(7):1192-8. https://pubmed.ncbi.nlm.nih.gov/20148336/

Pitt KM, Brumberg JS. Guidelines for feature matching assessment of brain–computer interfaces for augmentative and alternative communication. American journal of speech-language pathology. 2018;27(3):950-64. https://pubmed.ncbi.nlm.nih.gov/29860376/

Sellers EW, Vaughan TM, Wolpaw JR. A brain-computer interface for long-term independent home use. Amyotrophic Lat Scler. 2010;11(5):449-55. https://pubmed.ncbi.nlm.nih.gov/20583947/

Bensch M, Martens S, Halder S, Hill J, Nijboer F, Ramos A, Birbaumer N, Bogdan M, Kotchoubey B, Rosenstiel W. Assessing attention and cognitive function in completely locked-in state with event-related brain potentials and epidural electrocorticography. Journal of neural engineering. 2014;11(2):026006. https://pubmed.ncbi.nlm.nih.gov/24556584/

Beauchamp MS, Petit L, Ellmore TM, Ingeholm J, Haxby JV. A parametric fMRI study of overt and covert shifts of visuospatial attention. Neuroimage. 2001;14(2):310-21. https://pubmed.ncbi.nlm.nih.gov/11467905/

Astrand E, Wardak C, Ben Hamed S. Selective visual attention to drive cognitive brain–machine interfaces: From concepts to neurofeedback and rehabilitation applications. Frontiers in systems neuroscience. 2014;8:144. https://www.frontiersin.org/articles/10.3389/fnsys.2014.00144/full

Tonin L, Leeb R, Sobolewski A, del R Millán J. An online EEG BCI based on covert visuospatial attention in absence of exogenous stimulation. Journal of neural engineering. 2013;10(5):056007. https://pubmed.ncbi.nlm.nih.gov/23918205/

Marchetti M, Piccione F, Silvoni S, Gamberini L, Priftis K. Covert visuospatial attention orienting in a brain-computer interface for amyotrophic lateral sclerosis patients. Neurorehabil Neural Repair. 2013;27(5):430-8. https://pubmed.ncbi.nlm.nih.gov/23353184/

Coull JT, Frith C, Büchel C, Nobre A. Orienting attention in time: Behavioural and neuroanatomical distinction between exogenous and endogenous shifts. Neuropsychologia. 2000;38(6):808-19. https://pubmed.ncbi.nlm.nih.gov/10689056/

Arya R, Mangano FT, Horn PS, Holland KD, Rose DF, Glauser TA. Adverse events related to extraoperative invasive EEG monitoring with subdural grid electrodes: A systematic review and meta-analysis. Epilepsia. 2013;54(5):828-39. https://pubmed.ncbi.nlm.nih.gov/23294329/

Shenoy P, Miller KJ, Ojemann JG, Rao RP. Generalized features for electrocorticographic BCIs. IEEE Transactions on Biomedical Engineering. 2007;55(1):273-80. https://ieeexplore.ieee.org/document/4360075

Burle B, Spieser L, Roger C, Casini L, Hasbroucq T, Vidal F. (2015). Spatial and temporal resolutions of EEG: Is it really black and white? A scalp current density view. International Journal of Psychophysiology, 97(3):210-20. https://www.sciencedirect.com/science/article/pii/S0167876015001865

Fried-Oken M, Fox L, Rau MT, Tullman J, Baker G, Hindal M, Wile N, Lou J. (2006). Purposes of AAC device use for persons with ALS as reported by caregivers. Augmentative and Alternative Communication, 22(3):209-21. https://pubmed.ncbi.nlm.nih.gov/17114164/

Wolpaw J, Wolpaw EW. (2012). Brain-computer interfaces: principles and practice. OUP USA. https://oxford.universitypressscholarship.com/view/10.1093/acprof:oso/9780195388855.001.0001/acprof-9780195388855

Haselager P, Vlek R, Hill J, Nijboer F. (2009). A note on ethical aspects of BCI. Neural Networks, 22(9):1352-7. https://www.neurotechcenter.org/sites/default/files/misc/A%20note%20on%20ethical%20aspects%20of%20BCI.pdf

Orhan U, Hild KE, Erdogmus D, Roark B, Oken B, Fried-Oken M. (2012). RSVP keyboard: An EEG based typing interface. In: 2012 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), IEEE; 2012. p. 645-8. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3771530/

Carrasco M. (2011). Visual attention: The past 25 years. Vision Res, 51(13):1484-525. https://pubmed.ncbi.nlm.nih.gov/21549742/

Fried-Oken M, Howard J, Stewart SR. (1991). Feedback on AAC intervention from adults who are temporarily unable to speak. Augmentative and Alternative Communication, 7(1):43-50. https://www.tandfonline.com/doi/abs/10.1080/07434619112331275673

Brunner P, Joshi S, Briskin S, Wolpaw JR, Bischof H, Schalk G. (2010). Does the ‘P300’ speller depend on eye gaze? Journal of neural engineering, 7(5):056013. https://pubmed.ncbi.nlm.nih.gov/20858924/

Leeb R, Perdikis S, Tonin L, Biasiucci A, Tavella M, Creatura M, Molina A, Al-Khodairy A, Carlson T, dR Millán J. (2013). Transferring brain–computer interfaces beyond the laboratory: Successful application control for motor-disabled users. Artif Intell Med, 59(2):121-32. https://pubmed.ncbi.nlm.nih.gov/24119870/

Xu M, Wang Y, Nakanishi M, Wang Y, Qi H, Jung T, Ming D. (2016). Fast detection of covert visuospatial attention using hybrid N2pc and SSVEP features. Journal of neural engineering, 13(6):066003. https://pubmed.ncbi.nlm.nih.gov/27705952/

Theeuwes J. (1991). Exogenous and endogenous control of attention: The effect of visual onsets and offsets. Percept Psychophys, 49(1):83-90. https://pubmed.ncbi.nlm.nih.gov/2011456/

Tonin L, Leeb R, Del R Millán J. (2012). Time-dependent approach for single trial classification of covert visuospatial attention. Journal of neural engineering. 9(4):045011. https://pubmed.ncbi.nlm.nih.gov/22832204/

Andersson P, Ramsey NF, Raemaekers M, Viergever MA, Pluim JP. (2012). Real-time decoding of the direction of covert visuospatial attention. Journal of neural engineering 9(4):045004. https://pubmed.ncbi.nlm.nih.gov/22831959/

Bahramisharif A, Van Gerven M, Heskes T, Jensen O. (2010). Covert attention allows for continuous control of brain–computer interfaces. Eur J Neurosci, 31(8):1501-8. https://pubmed.ncbi.nlm.nih.gov/20525062/

Thut G, Nietzel A, Brandt SA, Pascual-Leone A. (2006). Alpha-band electroencephalographic activity over occipital cortex indexes visuospatial attention bias and predicts visual target detection. J Neurosci 26(37):9494-502. https://pubmed.ncbi.nlm.nih.gov/16971533/

Gmeindl L, Chiu Y, Esterman MS, Greenberg AS, Courtney SM, Yantis S. (2016). Tracking the will to attend: Cortical activity indexes self-generated, voluntary shifts of attention. Attention, Perception, & Psychophysics, 78(7):2176-84. https://pubmed.ncbi.nlm.nih.gov/27301353/

Bos DP, Duvinage M, Oktay O, Saa JD, Guruler H, Istanbullu A, Van Vliet M, Van De Laar B, Poel M, Roijendijk L. (2011). Looking around with your brain in a virtual world. In: 2011 IEEE Symposium on Computational Intelligence, Cognitive Algorithms, Mind, and Brain (CCMB) IEEE; 2011. p. 1-8. https://ieeexplore.ieee.org/document/5952110

Treder MS, Bahramisharif A, Schmidt NM, Van Gerven MA, Blankertz B. (2011). Brain-computer interfacing using modulations of alpha activity induced by covert shifts of attention. Journal of neuroengineering and rehabilitation 8(1):24. https://jneuroengrehab.biomedcentral.com/articles/10.1186/1743-0003-8-24

Blum A, Kalai A, Langford J. (1999). Beating the hold-out: Bounds for k-fold and progressive cross-validation. In: COLT, p. 203-8. https://www.ri.cmu.edu/pub_files/pub1/blum_a_1999_1/blum_a_1999_1.pdf

Kübler A, Neumann N, Wilhelm B, Hinterberger T, Birbaumer N. Predictability of brain-computer communication. Journal of Psychophysiology. 2004;18(2/3):121-9. https://www.researchgate.net/publication/232506335_Predictability_of_Brain-Computer_Communication

Kübler A, Birbaumer N. Brain–computer interfaces and communication in paralysis: Extinction of goal directed thinking in completely paralysed patients? Clinical neurophysiology. 2008;119(11):2658-66. https://pubmed.ncbi.nlm.nih.gov/18824406/

Cunningham P. Dimension Reduction. In: Cord M, Cunningham P, editors. Machine Learning Techniques for Multimedia: Case Studies on Organization and Retrieval. Berlin, Heidelberg: Springer Berlin Heidelberg; 2008; p. 91-112. Available from: https://doi.org/10.1007/978-3-540-75171-7_4

Agrawal P, Bhargavi D, Han X, Tevathia N, Popa A, Ross N, Woodbridge DM, Zimmerman-Bier B, Bosl W. A Scalable Automated Diagnostic Feature Extraction System for EEGs. In: 2019 IEEE 43rd Annual Computer Software and Applications Conference (COMPSAC); IEEE; 2019. p. 446-51. https://repository.usfca.edu/cgi/viewcontent.cgi?article=1158&context=nursing_fac

Singh A, Thakur N, Sharma A. A review of supervised machine learning algorithms. In: 2016 3rd International Conference on Computing for Sustainable Global Development (INDIACom); IEEE; 2016. p. 1310-5. https://ieeexplore.ieee.org/abstract/document/7724478

Chen T, Guestrin C. XGBoost: A scalable tree boosting system. In: Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining; ACM; 2016. p. 785-94.

Cecotti H, Graeser A. Convolutional neural network with embedded Fourier transform for EEG classification. In: 2008 19th International Conference on Pattern Recognition, IEEE; 2008. p. 1-4. https://ieeexplore.ieee.org/document/4761638

Lawhern VJ, Solon AJ, Waytowich NR, Gordon SM, Hung CP, Lance BJ. (2018). EEGNet: A compact convolutional neural network for EEG-based brain–computer interfaces. Journal of neural engineering, 15(5):056013. https://iopscience.iop.org/article/10.1088/1741-2552/aace8c/meta

Schirrmeister RT, Springenberg JT, Fiederer LDJ, Glasstetter M, Eggensperger K, Tangermann M, Hutter F, Burgard W, Ball T. (2017). Deep learning with convolutional neural networks for EEG decoding and visualization. Hum Brain Map 38(11):5391-420. https://pubmed.ncbi.nlm.nih.gov/28782865/

Acharya UR, Oh SL, Hagiwara Y, Tan JH, Adeli H, Subha DP. (2018). Automated EEG-based screening of depression using deep convolutional neural network. Comput Methods Programs Biomed 161:103-13. https://www.sciencedirect.com/science/article/abs/pii/S0169260718301494

Sors A, Bonnet S, Mirek S, Vercueil L, Payen J. (2018). A convolutional neural network for sleep stage scoring from raw single-channel EEG. Biomedical Signal Processing and Control 42:107-14. https://www.sciencedirect.com/science/article/abs/pii/S1746809417302847

Hajinoroozi M, Mao Z, Jung T, Lin C, Huang Y. (2016). EEG-based prediction of driver’s cognitive performance by deep convolutional neural network. Signal Process Image Commun 47:549-55. https://www.sciencedirect.com/science/article/abs/pii/S0923596516300832

Thiery T, Lajnef T, Jerbi K, Arguin M, Aubin M, Jolicoeur P. (2016). Decoding the locus of covert visuospatial attention from EEG signals. PloS one 11(8):e0160304. https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0160304

Posner MI, Snyder CR, Davidson BJ. (1980). Attention and the detection of signals. J Exp Psychol: Gen, 109(2):160. https://pubmed.ncbi.nlm.nih.gov/7381367/

Albares M, Criaud M, Wardak C, Nguyen SCT, Ben Hamed S, Boulinguez P. (2011). Attention to baseline: Does orienting visuospatial attention really facilitate target detection? J Neurophysiol 106(2):809-16. https://pubmed.ncbi.nlm.nih.gov/21613585/

Ibos G, Duhamel J, Ben Hamed S. (2009). The spatial and temporal deployment of voluntary attention across the visual field. PLoS One, 4(8):e6716. https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0006716

Prinzmetal W, McCool C, Park S. (2005). Attention: Reaction time and accuracy reveal different mechanisms. J Exp Psychol: Gen, 134(1):73. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3977878/

Anton-Erxleben K, Carrasco M. (2013). Attentional enhancement of spatial resolution: Linking behavioural and neurophysiological evidence. Nature Reviews Neuroscience, 14(3):188. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3977878/

Carrasco M, Yeshurun Y. Covert attention effects on spatial resolution. Prog Brain Res. 2009;176:65-86. https://pubmed.ncbi.nlm.nih.gov/19733750/

Van Rossum G. Python Programming Language. In: USENIX annual technical conference; 2007. p. 36. https://www.usenix.org/conference/2007-usenix-annual-technical-conference/presentation/python-programming-language

Woolley SC, York MK, Moore DH, Strutt AM, Murphy J, Schulz PE, Katz JS. (2010). Detecting frontotemporal dysfunction in ALS: Utility of the ALS cognitive behavioral screen (ALS-CBS™). Amyotrophic Lat Scler 11(3):303-11. https://pubmed.ncbi.nlm.nih.gov/20433413/

Armon C. How are ALS Functional Rating Scale (ALSFRS) scores interpreted in the assessment of amyotrophic lateral sclerosis (ALS)? [Internet]. [cited 9/26/2019]. Available from: https://www.medscape.com/answers/1170097-81928/howare-als-functional-rating-scale-alsfrs-scores-interpreted-in-the-assessment-of-amyotrophic-lateral-sclerosis-als