Synthesized speech technology has given a voice to many who can’t speak.

But that gift may not offer them a “voice” of their own.

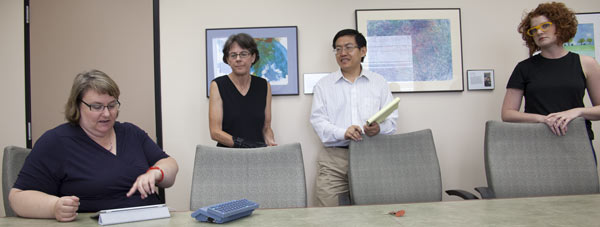

Four University of Wisconsin-Milwaukee researchers will be exploring the issues and challenges faced by those using synthesized speech. The researchers bring different perspectives to the project, which is funded by a $200,000 Center for 21st Century Studies Interdisciplinary Challenge Award.

Shelley Lund is an associate professor of communication sciences and disorders; Patricia Mayes is an associate professor of English with training in linguistics; Heather Warren-Crow is an assistant professor of art theory and practice in the Peck School of the Arts; and Yi Hu is an assistant professor of electrical engineering and computer science.

Augmentative and Alternative Communication (AAC) technologies, developed in the 1970s and early 1980s, allow an individual to type what they want to say into a voice synthesizer, press a button and turn their typed words into spoken words.

When the technology was first developed for use in communication disorders, says Lund, special devices were needed. The technology expanded with the development of voices for ATMs and computerized voicemail that could be adapted for those with communication disorders. Today, synthesized voices can be downloaded to iPads, smart phones and other mobile devices.

When the ATM voice is yours

The technology has improved from early models that sounded like a robot speaking to more natural-sounding voices, says Lund, but AAC technologies still have limits. For example, there are only a few “voices” to choose from.

It’s not unusual, says Lund, for a group of women with communication disorders to get together and find they’re all using the “Betty” voice, listening to others who sound exactly like them. Or, adds Warren-Crow, “What happens when you hear the ATM, and it’s the same voice you’re using?”

“Past research has been focused on intelligibility of the voices, and, given the quality of the voices we had access to in the past, it was important to make them easier to understand,” says Lund. “Now that the overall quality of the voices has improved, we can turn our attention to other issues.”

“Emotion is arguably the most challenging aspect of the voice to convey through synthesis,” says Warren-Crow. So while some programs have added laughter, it’s still hard to convey sarcasm and other affective aspects, she adds. Slang, dialects and proper names can also be a challenge for voice synthesizers.

“We want to look at the issues people using AAC technologies face – how the use of specific available voices affects their identity and their dialog with others,” adds Mayes.

Our voices, our selves?

In their project, the researchers will interview people who use various types of synthesizers to learn how the voice they choose affects their perception of themselves and what vocal options they might like to see. They will also record actual interactions between speakers and look at the different ways they adapt to their synthesized voices.

For example, one of the areas Mayes researches is how people express various socially relevant meanings that are conveyed through regular patterns in utterances and gestures, often at an unconscious level. “What we don’t know is how users of synthesized voices are able to express these more subtle meanings,” she says.

Voice technology engineers have already begun to create voices that reflect various cultures and nationalities. For example, a voice called “Heather” speaks with a Scottish accent. The UWM researchers want to look at how important these distinct voices are to those using them, and how voices with different dialects and accents can be created while avoiding stereotyping.

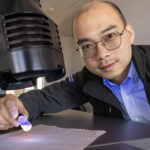

Researchers will refine and perhaps expand the scope of a tablet application they are developing under Hu’s leadership. “We want to see if we can convert what we learn into practical applications for devices like smart phones,” he says. With the iPhone already having “Siri – a speechified voice,” in general use, developing different smart phone voices for those who need them is possible, he adds.

“Can synthesized voices convey what people feel are their identities?” asks Warren-Crow. “We don’t know.” To get feedback on their project, and beta-test the application, she will create a piece of “sound art” for the community, performed by people with and without communication disabilities.

This project is a good fit for transdisciplinary work, according to the researchers. Mayes and Warren-Crow accompanied Lund to an AAC conference in late July so they could learn more about the field and make contacts with people who could contribute to the project.

“Our methodologies and approaches are different,” says Warren-Crow of the research team. However, the combination of social sciences, humanities, arts and engineering is well-suited to the project’s goal of exploring what having a voice means, and studying the more flexible and aesthetically pleasing options developed for those using synthetic voice technology, she adds.

“The approach we’re taking with this grant is to start from the perspective of the humanities, using scientific and artistic methodologies,” says Mayes. “We’re bringing a humanities approach to devices created by engineers and designers.”